If two or more people code the same document independently of each other using an identical code system, you can use MAXQDA to check how similarly the codes were applied to the data. This feature is called “Intercoder agreement” and offers different ways to measure the agreement between coders, including calculating the intercoder coefficient Kappa. In qualitative analysis, calculating intercoder agreement mostly serves to improve coding instructions and individual codes.

How to prepare for intercoder agreement calculation

To calculate intercoder agreement you need a MAXQDA project that contains each document to be included in the analysis twice: one version coded by team member A, the other coded by team member B. Both versions have to be coded using an identical code system. The easiest way to set up your data for this is by following these steps:

1. Set up your MAXQDA project

Import the document that will be coded by the team members. Add all codes that are going to be used to the code system. This is the project that will be used by team member A.

2. Duplicate your MAXQDA project

Duplicate your project to create a second version, which will be used by team member B. Open the duplicated project and change the name of the document to reflect that it is a second version, e.g. “Interview 1 (team member B)”. Send this project file to the other team member.

3. Team members code on their own

Both team members code the document on their own in their own project file.

4. Merge both projects

Open one of the projects and select “Project > Merge projects”. Select the other project file and merge them into one. You now have one MAXQDA project file that contains both documents.

Once you have both documents in one project file you are ready to check for intercoder agreement.

Check for intercoder agreement

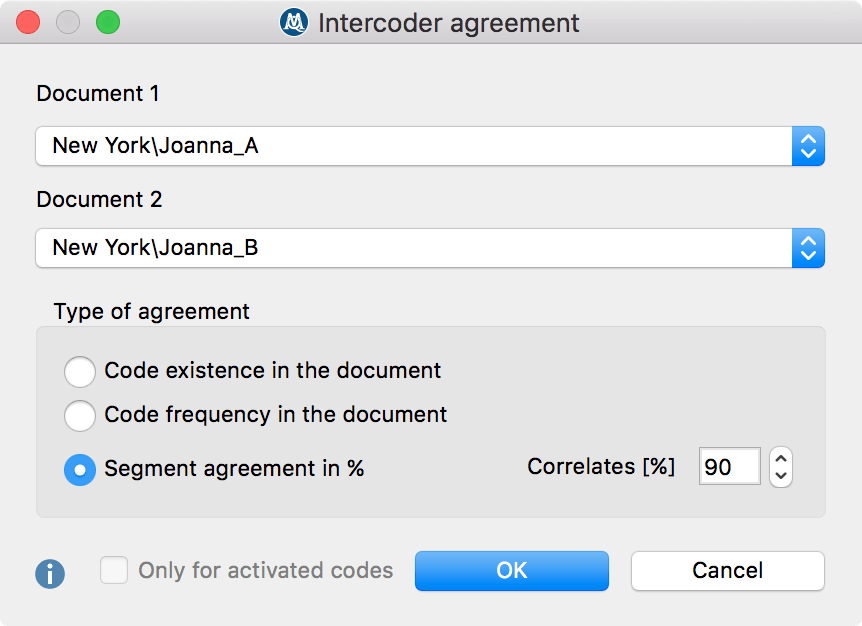

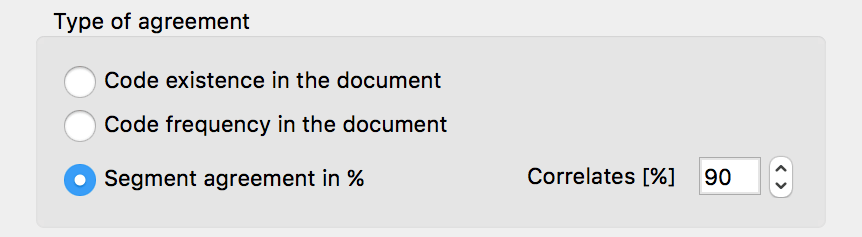

You can now start the Intercoder Agreement function by selecting “Analysis > Intercoder agreement”. Select the two documents in question from the dropdown menus under “Document 1” and “Document 2”. There are three types of agreement available.

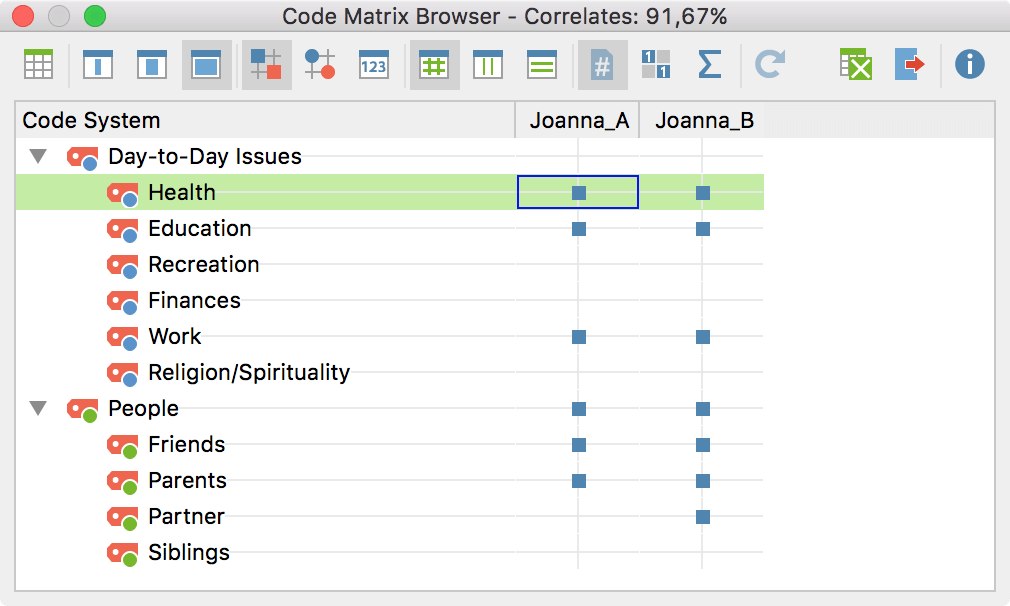

1. Code existence in the document

If you select this option, MAXQDA will check if the same codes were used at least once in each of the documents. This option is most appropriate when the document is short and there are many codes in the “Code System.” The result of this intercoder agreement check is a Code Matrix Browser that displays both documents and the code system. If a code appears in a document, it is displayed as a blue square or circle.

The screenshot shows results with a high level of agreement on which codes were used. The two team members differ only in the use of the code “Partner”.

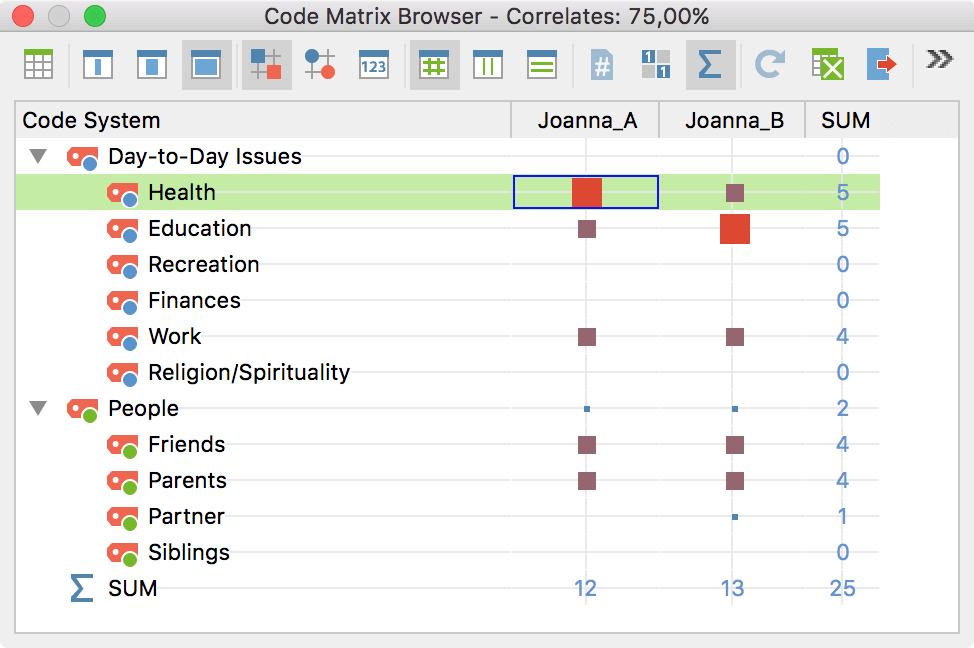
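The logic behind this check can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not MAXQDA's implementation: the function name `code_existence_agreement`, the extra code “Siblings”, and the rule that a code counts as an agreement when both coders used it or both did not are all hypothetical choices made for the example.

```python
def code_existence_agreement(codes_a, codes_b, code_system):
    """Compare which codes each coder used at least once.

    Returns the share of codes (in percent) on which both coders agree,
    plus the list of codes used by only one of them.
    Assumption: a code counts as an agreement if both coders used it
    or if neither of them did.
    """
    used_a, used_b = set(codes_a), set(codes_b)
    differing = [c for c in code_system if (c in used_a) != (c in used_b)]
    agreeing = len(code_system) - len(differing)
    return 100.0 * agreeing / len(code_system), differing


# Made-up example data; "Siblings" is a hypothetical extra code.
code_system = ["Friends", "Parents", "Partner", "Siblings"]
coder_a = ["Friends", "Parents", "Partner", "Siblings"]
coder_b = ["Friends", "Parents", "Siblings"]

percent, differing = code_existence_agreement(coder_a, coder_b, code_system)
print(percent, differing)
```

In this made-up example the coders differ only on “Partner”, which mirrors the situation described for the screenshot.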

2. Code frequency in the document

This option analyzes the documents in more detail and checks if the same codes were used the same number of times in each of the documents. A correlation percentage is shown at the top of the results window.
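A frequency comparison of this kind could look roughly like the following sketch. It assumes that a code counts as an agreement only when both coders applied it exactly the same number of times; the function name `code_frequency_agreement` and the sample data are hypothetical, and the percentage MAXQDA reports may be computed differently.

```python
from collections import Counter


def code_frequency_agreement(assignments_a, assignments_b, code_system):
    """Compare how often each coder applied each code.

    Each assignments list holds one entry per coded segment.
    Returns the share of codes (in percent) with identical frequencies
    and a per-code table of (code, count_a, count_b, same_frequency).
    """
    freq_a, freq_b = Counter(assignments_a), Counter(assignments_b)
    rows = [
        (code, freq_a[code], freq_b[code], freq_a[code] == freq_b[code])
        for code in code_system
    ]
    matching = sum(1 for row in rows if row[3])
    return 100.0 * matching / len(code_system), rows


# Made-up example data: one list entry per coded segment.
code_system = ["Friends", "Parents", "Partner"]
coder_a = ["Friends", "Friends", "Parents", "Partner"]
coder_b = ["Friends", "Friends", "Parents", "Parents"]

percent, rows = code_frequency_agreement(coder_a, coder_b, code_system)
for code, count_a, count_b, same in rows:
    print(f"{code}: A={count_a}, B={count_b}, same frequency: {same}")
print(f"Agreement: {percent:.1f}%")
```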

3. Segment agreement in %

With this option you can decide that coded segments are considered an “agreement” even if the codes were not placed 100% identically. The default value is 90%, which means segments are considered equally coded even if they differ slightly (by up to 10%). If you want to be more or less strict, you can enter a different percentage.
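To make the threshold idea concrete, here is a minimal sketch. It assumes that segments are given as (start, end) character positions and that the overlap percentage is the shared length divided by the total length covered by both segments; this definition and the names `overlap_percent` and `segments_agree` are assumptions for illustration, and MAXQDA may measure the overlap differently.

```python
def overlap_percent(seg_a, seg_b):
    """Overlap of two segments as a percentage of their combined extent.

    Segments are (start, end) character positions with start < end.
    Assumption: overlap = shared length / total length covered by both.
    """
    start_a, end_a = seg_a
    start_b, end_b = seg_b
    shared = max(0, min(end_a, end_b) - max(start_a, start_b))
    combined = (end_a - start_a) + (end_b - start_b) - shared
    if combined == 0:
        return 0.0
    return 100.0 * shared / combined


def segments_agree(seg_a, seg_b, threshold=90.0):
    """Treat two segments as an agreement if they overlap enough."""
    return overlap_percent(seg_a, seg_b) >= threshold


# Made-up positions: coder B ends the first segment five characters early.
close_match = segments_agree((100, 200), (100, 195))  # overlap is 95%
loose_match = segments_agree((100, 200), (120, 200))  # overlap is 80%
print(close_match, loose_match)
```

Lowering the threshold, for example `segments_agree((100, 200), (120, 200), threshold=75.0)`, would count the second pair as an agreement as well.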

Two result tables are created. The first one displays the correlation percentage for each code. You can see how many times a code appears and how many of these times the codes are in agreement in both documents. This makes it easy to identify which code might need a better definition and which codes have been used in the same way by each team member. In the screenshot above both team members used the “Friends” code very similarly, but differ in their use of “Parents”. Only one team member used the code “Partner”.

The second result table lists which coder coded which segment and whether the segments match. The red or green icon at the start of each row makes it easy to identify which segments were coded the same way and which were coded differently. Click on the box under “Document 1” or “Document 2” and the Document Browser window jumps to the corresponding passage, so that you can discuss these passages with each other.

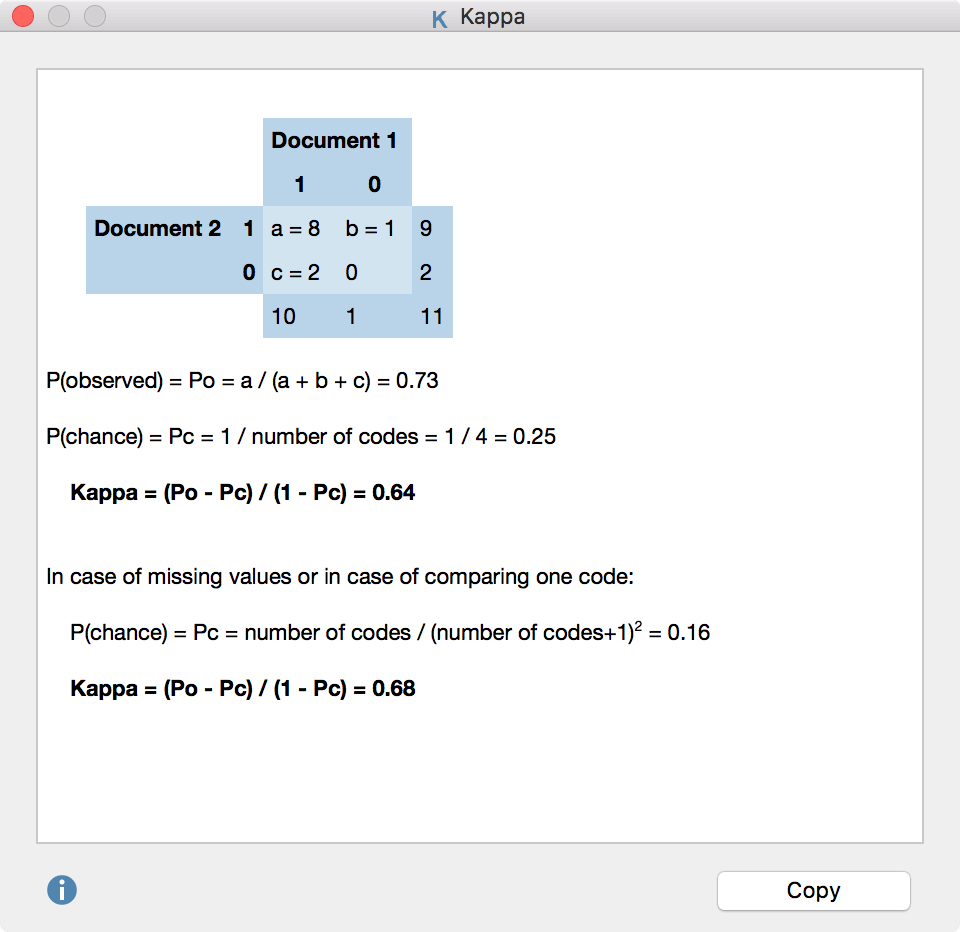

Calculating Coefficient Kappa

Some researchers might want to calculate chance-corrected coefficients. The basic idea of such a coefficient is to reduce the percentage of agreement by the number of agreements that would be obtained in a random assignment of codes to segments. In MAXQDA, the commonly used coefficient “Kappa” can be used for this purpose: In the results table, click on the Kappa symbol to begin the calculation for the analysis currently underway. MAXQDA will calculate and display the intercoder coefficient Kappa.
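The chance correction can be illustrated with Cohen's kappa, one widely used coefficient of this family, which compares the observed agreement with the agreement expected by chance: kappa = (observed - expected) / (1 - expected). The sketch below is an assumption-laden illustration, not necessarily the formula MAXQDA applies; it assumes each coder assigned exactly one code to each of the same segments, and the function name `cohens_kappa` and the sample data are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who each labeled the same segments.

    labels_a[i] and labels_b[i] are the codes the two coders assigned
    to segment i (assumption: exactly one code per segment).
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(labels_a)
    # Share of segments on which both coders chose the same code.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each coder assigned codes at random,
    # keeping their own overall code frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Made-up example: four segments, the coders disagree on the last one.
coder_a = ["Friends", "Friends", "Parents", "Parents"]
coder_b = ["Friends", "Friends", "Parents", "Friends"]
kappa = cohens_kappa(coder_a, coder_b)
print(round(kappa, 2))
```

Here the observed agreement is 75%, but half of that would be expected by chance given how often each coder used each code, so the chance-corrected value is noticeably lower than the raw percentage.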