Guest post by Natalie Nóbrega Santos, Vera Monteiro & Lourdes Mata – Centro de Investigação em Educação, ISPA – Instituto Universitário.

What is assessment? “…[it] is to give grades because good grades lead to a good job, to have money and then a good life” (3rd-grade student). The way students perceive assessment is critical because their beliefs will guide and determine the strategies they use for their studies (Brown & Harris, 2012; Brown & Hirschfeld, 2007). What teachers think about assessment is also essential because it guides how they implement assessment in the classroom (Barnes, Fives, & Dacey, 2017; Brown, 2008; Brown, Kennedy, Fok, Chan, & Yu, 2009; Opre, 2015; Vandeyar & Killen, 2007).

Background: What do elementary school teachers and students think about mathematics assessment?

Teachers and students have their own ideas about assessment that converge in some ways but not in others. These conceptions can affect their behavior (e.g., teaching and learning strategies) and the affective elements of learning (e.g., motivation). Our study was part of a research project that explored how teachers’ and students’ conceptions of assessment and teachers’ assessment practices relate to students’ outcomes. In the present study, we specifically sought to determine how Portuguese elementary school teachers and their students perceive assessment in mathematics and where their views agree. We used MAXQDA’s tools to support our research process.

Research Data

Five teachers and the 82 third-grade students in their classes (Classes A, B, C, D, and E), from four different schools, participated in our study. We collected data through individual interviews with the five teachers and focus group discussions with their students (two groups for Class D, three each for Classes A and B, and four each for Classes C and E).

The conversations addressed five assessment topics for the teachers:

- assessment definition,

- targets,

- purposes,

- practices, and

- criteria,

and three for the students:

- definition,

- purposes, and

- practices.

Data Analysis with MAXQDA

The coding process

We performed a content analysis using MAXQDA. We coded the verbatim transcripts of the interviews and focus groups into fragments describing participants’ different experiences of assessment in mathematics. We used both deductive and inductive approaches. Starting with the categories previously described by Brown (2008), we applied a cyclical process, redefining these categories until they represented the reality of our participants (Miles, Huberman, & Saldaña, 2014).

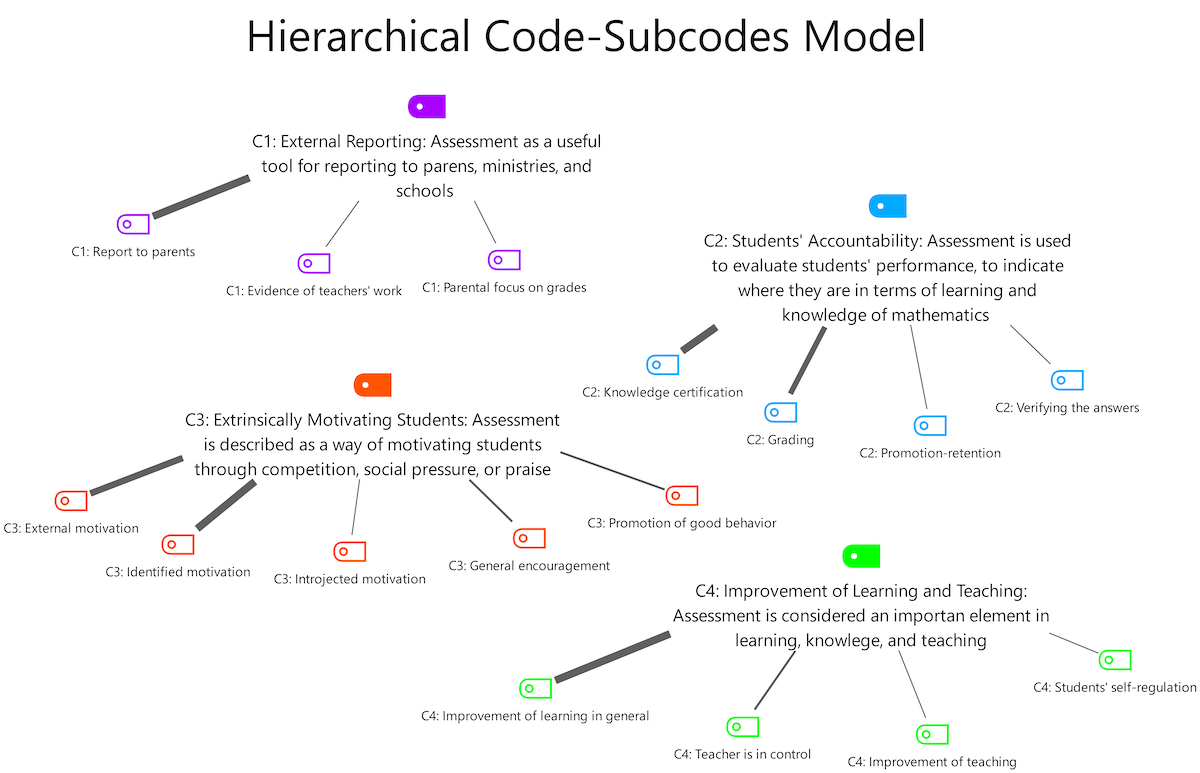

We also defined subcategories to enrich the analysis. We identified four qualitatively different categories of assessment conceptions:

- external reporting,

- students’ accountability,

- external motivation of students, and

- improvement of learning and teaching.

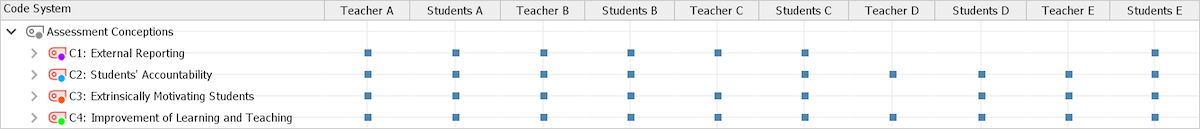

Figure 1 presents a concept map, created with MAXQDA’s MAXMaps feature, that shows the final hierarchical code-subcode system. The widths of the linking lines indicate how frequently each code was assigned in the participants’ discourse.

Figure 1. Visualization of the Code System using MAXMaps’ Hierarchical Code-Subcodes Model

Visualizing our results

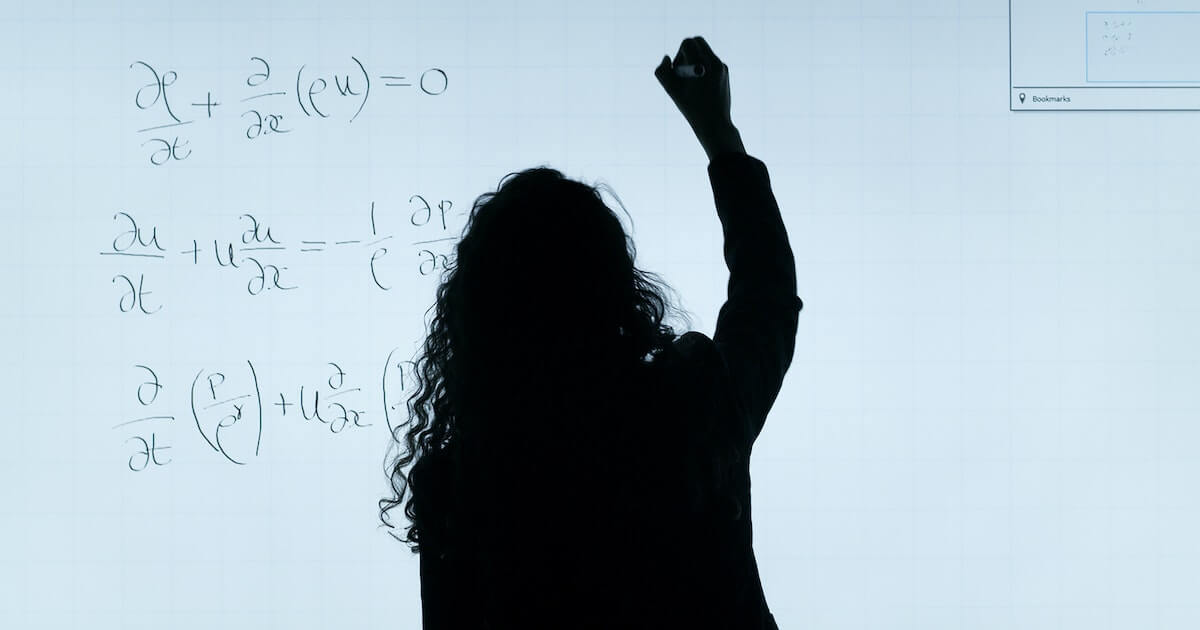

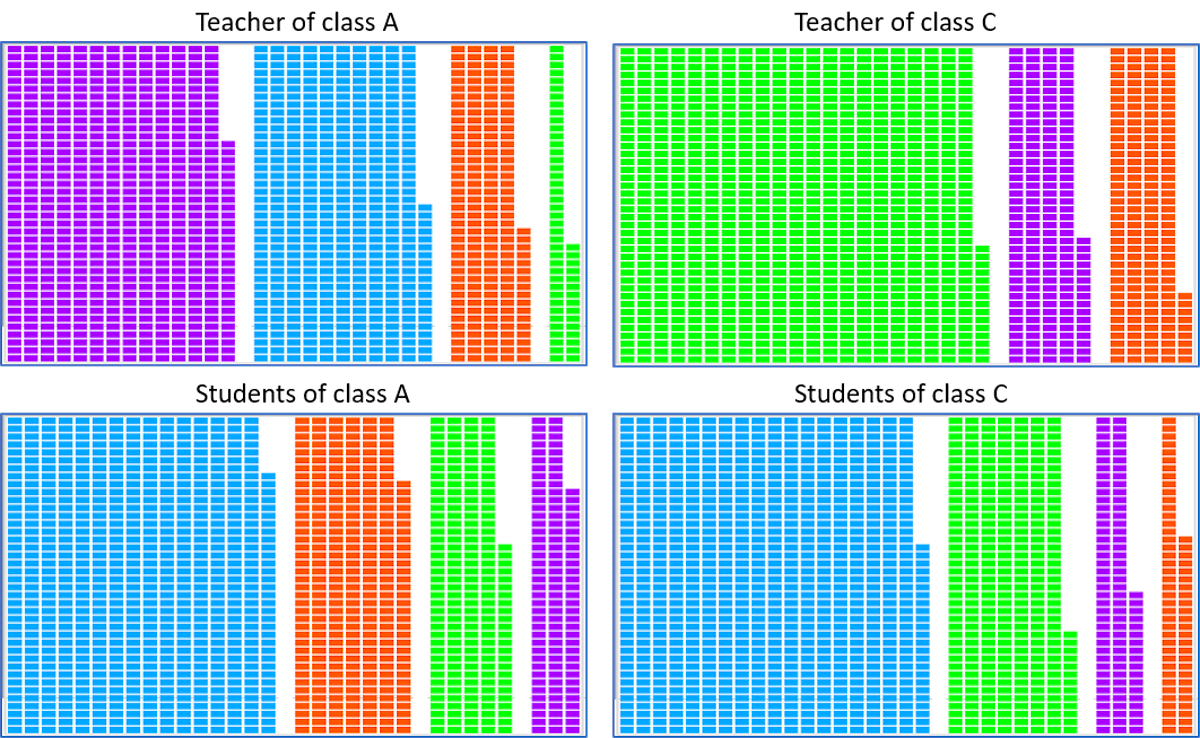

No clear difference between participants can be noted at this point because teachers and students seem to hold multiple, overlapping beliefs about assessment.

Figure 2. Visualization of the categories and subcategories mentioned by each teacher and his/her students using MAXQDA’s Code Matrix Browser

Deepening the analysis

As we sought a more direct and precise analysis of the assessment conceptions of the participants, we used several MAXQDA functions to identify the most dominant categories for each teacher and his/her students. We applied MAXQDA’s Document Portrait to visualize the category most often mentioned by the participant(s), which we think reflects the one they found most important (Figure 3).

Figure 3. Visualization of the category most often mentioned by teachers and students from Classes A and C using MAXQDA’s Document Portrait

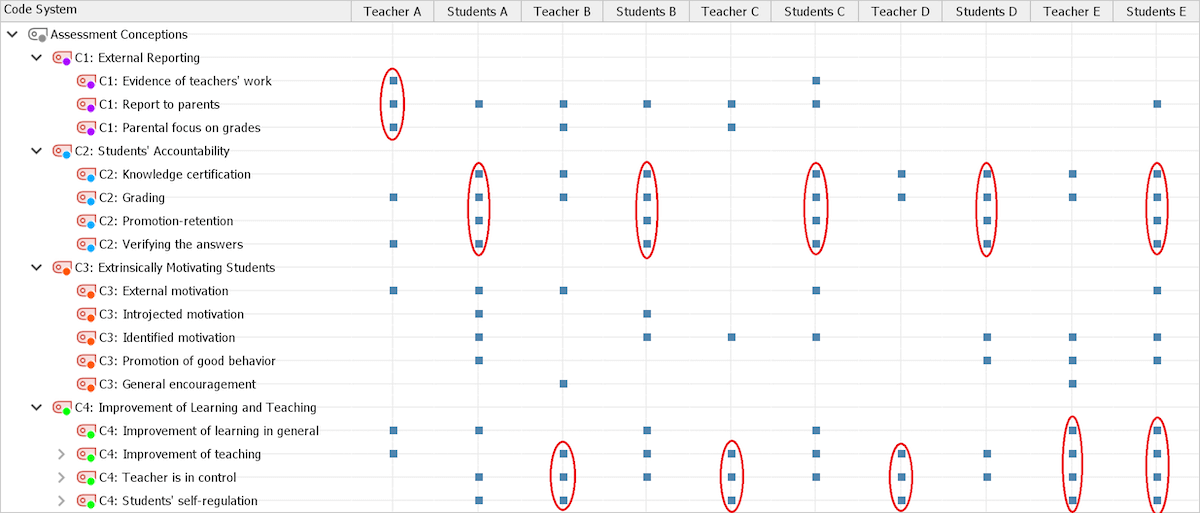
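The "most often mentioned" criterion can be sketched outside MAXQDA as a simple frequency count. The snippet below is a minimal illustration, not the authors' procedure: it assumes the coded segments of one document have been exported as a list of top-level category labels, and the example list is invented for illustration rather than taken from the study. Counting segments is itself a simplification, since a visual tool may weight segments differently (for example, by length).

```python
from collections import Counter

# Hypothetical coded segments for one document (e.g., one teacher interview).
# Each entry is the top-level category assigned to one coded segment.
# These values are invented for illustration; they are not the study's data.
coded_segments = [
    "improvement of learning and teaching",
    "external reporting",
    "improvement of learning and teaching",
    "students' accountability",
    "improvement of learning and teaching",
]


def most_mentioned_category(segments):
    """Return (category, count) for the category coded most often.

    Ties are resolved in favor of the category that appears first,
    which is how Counter.most_common orders equal counts.
    """
    counts = Counter(segments)
    return counts.most_common(1)[0]


category, count = most_mentioned_category(coded_segments)
print(f"{category}: {count} segments")
```

The function name `most_mentioned_category` and the flat-list input format are assumptions made for this sketch.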

We employed the Code Matrix Browser to visualize the category that the participants broke down into the largest number of aspects (or subcategories). We took the richness of the content that participants addressed as an indication of how deeply they understood the category (Figure 4).

Figure 4. Visualization of the category that the participants most deeply understood using MAXQDA’s Code Matrix Browser

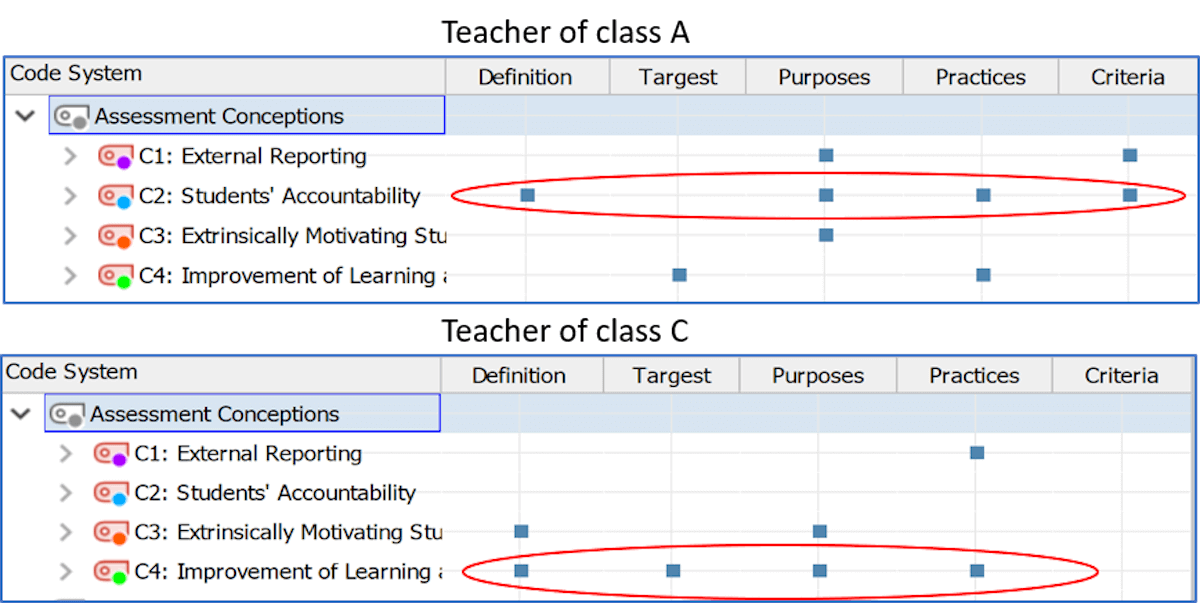

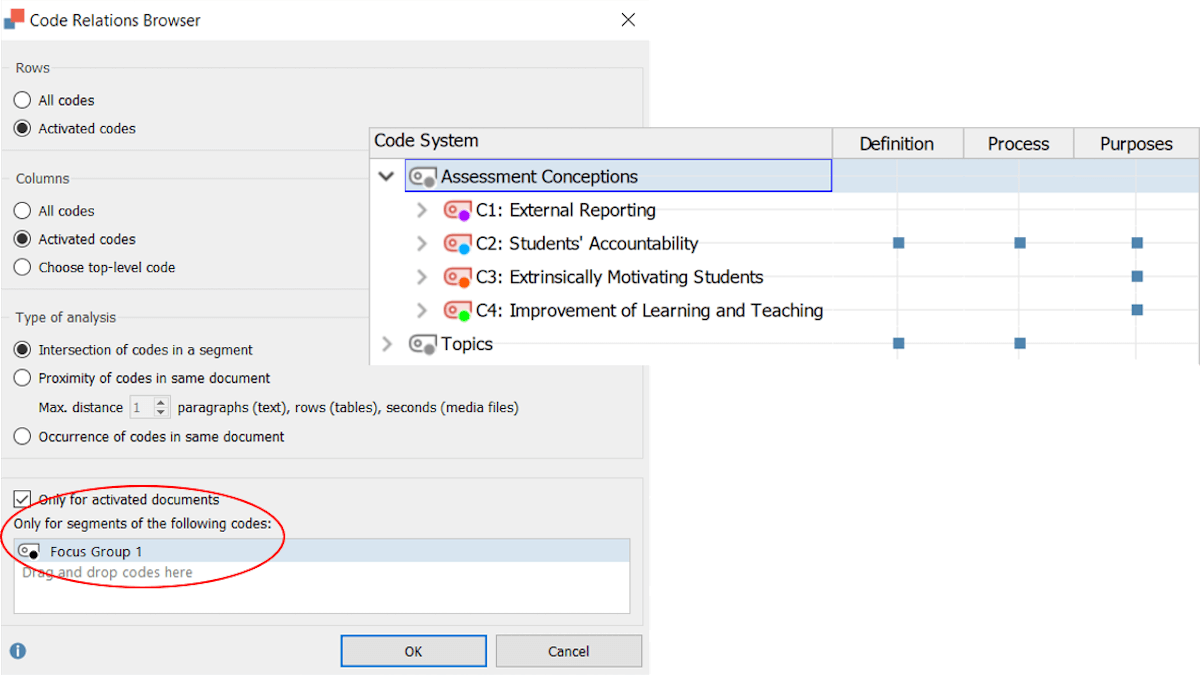
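The "richness" criterion, meaning how many distinct subcategories a participant uses within a category, can be approximated with a small script. This is a hedged sketch under assumptions: the coded segments are represented as (category, subcategory) pairs, and the subcategory names (`sub_a`, `sub_b`, ...) are placeholders rather than the study's actual codes.

```python
from collections import defaultdict

# Hypothetical (category, subcategory) pairs for one participant.
# Subcategory names are placeholders, not the codes used in the study.
coded_pairs = [
    ("external reporting", "sub_a"),
    ("external reporting", "sub_a"),  # repeated mention, same subcategory
    ("improvement of learning and teaching", "sub_b"),
    ("improvement of learning and teaching", "sub_c"),
    ("improvement of learning and teaching", "sub_d"),
]


def subcategory_breadth(pairs):
    """Count the distinct subcategories mentioned under each category."""
    seen = defaultdict(set)
    for category, subcategory in pairs:
        seen[category].add(subcategory)
    return {category: len(subs) for category, subs in seen.items()}


breadth = subcategory_breadth(coded_pairs)
# The category broken down into the most distinct subcategories.
richest = max(breadth, key=breadth.get)
print(breadth, richest)
```

Using sets means that repeated mentions of the same subcategory do not inflate the count, so this measure stays separate from the frequency criterion.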

Finally, we used MAXQDA’s Code Relations Browser to visualize the category with the most consistent presence in the interview or focus group discussion, that is, the one mentioned across all the topics of conversation (Figure 5).

Figure 5. Visualization of the category with the most consistent presence in the interview of teachers from Classes A and C using MAXQDA’s Code Relations Browser
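The consistency criterion, a category that appears under every topic of conversation, amounts to a set-coverage check. The sketch below assumes each coded segment carries both a topic code and a category code, represented as (topic, category) pairs. The topic names come from the list of teacher topics in this post; the pairs themselves are invented for illustration.

```python
from collections import defaultdict

# The five interview topics listed for teachers in this post.
TEACHER_TOPICS = frozenset(
    {"definition", "targets", "purposes", "practices", "criteria"}
)

# Hypothetical (topic, category) co-occurrences; not the study's data.
coded_pairs = [
    ("definition", "improvement of learning and teaching"),
    ("targets", "improvement of learning and teaching"),
    ("purposes", "improvement of learning and teaching"),
    ("practices", "improvement of learning and teaching"),
    ("criteria", "improvement of learning and teaching"),
    ("purposes", "external reporting"),
    ("practices", "external reporting"),
]


def topics_per_category(pairs):
    """Map each category to the set of topics under which it was coded."""
    coverage = defaultdict(set)
    for topic, category in pairs:
        coverage[category].add(topic)
    return dict(coverage)


def consistent_categories(pairs, topics):
    """Return the categories that were coded under every topic."""
    coverage = topics_per_category(pairs)
    return sorted(c for c, seen in coverage.items() if topics <= seen)


print(consistent_categories(coded_pairs, TEACHER_TOPICS))
```

For the student focus groups, the same check would use the three student topics (definition, purposes, practices) instead.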

Since we had more than one focus group per teacher, we assessed the consistency of the results for each criterion by observing each focus group separately. Using the Code Relations Browser option “Only for segments of the following code,” we limited the search to the segments of one focus group at a time, as shown in Figure 6. We only considered a category dominant for the students of a class if it was mentioned by at least 50% of that class’s focus groups.

Figure 6. Visualization of the category with the most consistent presence in one of the focus groups of Class A using MAXQDA’s Code Relations Browser
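The 50% rule for class-level dominance can be written as a short aggregation step. This sketch assumes the rule refers to the share of a class's focus groups (not of individual students) and that each focus group has already been assigned one dominant category for a given criterion. The function name and the example inputs are hypothetical.

```python
from collections import Counter


def class_dominant_categories(per_group_dominant, threshold=0.5):
    """Return categories that are dominant in at least `threshold` of groups.

    per_group_dominant: one dominant category per focus group of a class.
    """
    if not per_group_dominant:
        return []
    counts = Counter(per_group_dominant)
    n_groups = len(per_group_dominant)
    return sorted(c for c, k in counts.items() if k / n_groups >= threshold)


# Hypothetical class with three focus groups: 2 of 3 groups agree.
three_groups = [
    "external reporting",
    "external reporting",
    "students' accountability",
]
print(class_dominant_categories(three_groups))

# Hypothetical class with four focus groups split 2-2: both reach 50%.
four_groups = [
    "external reporting",
    "external reporting",
    "students' accountability",
    "students' accountability",
]
print(class_dominant_categories(four_groups))
```

With an "at least 50%" threshold, an even split yields two dominant categories for the class, which is one reason to state the threshold explicitly.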

Results and Conclusions

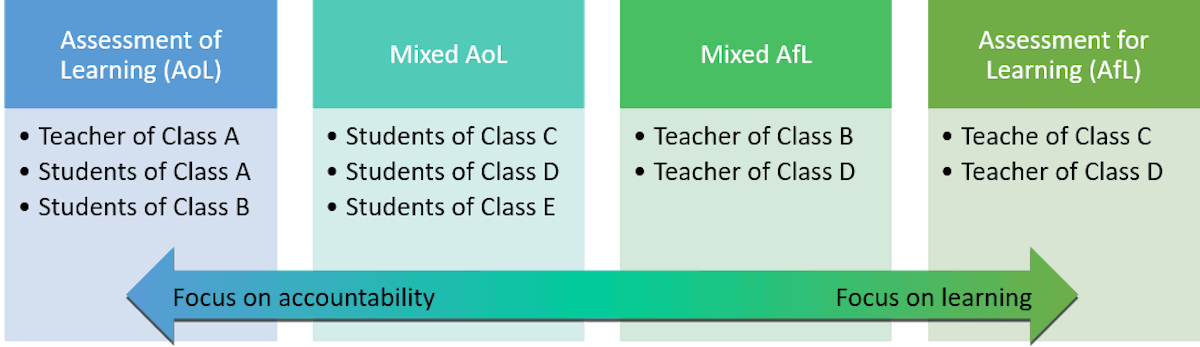

MAXQDA’s visual tools allowed us to recognize four different comprehensive conceptions of assessment, ordered along a continuum ranging from the assessment of learning pole (AoL, greater focus on certification and accountability) to the assessment for learning pole (AfL, greater emphasis on improving learning and teaching) (Figure 7).

Figure 7. Description of the comprehensive conceptions of teachers and students

The results indicate an inconsistency between students’ and teachers’ dominant conceptions of assessment: students sit predominantly at the AoL pole of the continuum and teachers at the AfL pole. We believe that this disparity may be due to inconsistencies between teachers’ conceptions and their assessment practices.

This study was supported by the FCT – Science and Technology Foundation, research project PTDC/MHC-CED/1680/2014 and UID/CED/04853/2016.

References

Barnes, N., Fives, H., & Dacey, C. M. (2017). U.S. teachers’ conceptions of the purposes of assessment. Teaching and Teacher Education, 65, 107–116. https://doi.org/10.1016/j.tate.2017.02.017

Brown, G. T. L. (2008). Conceptions of assessment: Understanding what assessment means to teachers and students. New York, NY: Nova Science Publishers.

Brown, G. T. L., & Harris, L. (2012). Student conceptions of assessment by level of schooling: Further evidence for ecological rationality in belief systems. Australian Journal of Educational and Developmental Psychology, 12, 46–59.

Brown, G. T. L., & Hirschfeld, G. H. F. (2007). Students’ conceptions of assessment and mathematics: Self-regulation raises achievement. Australian Journal of Educational and Developmental Psychology, 7, 63–74.

Brown, G. T. L., Kennedy, K. J., Fok, P. K., Chan, J. K. S., & Yu, W. M. (2009). Assessment for student improvement: Understanding Hong Kong teachers’ conceptions and practices of assessment. Assessment in Education: Principles, Policy & Practice, 16(3), 347–363. https://doi.org/10.1080/09695940903319737

Miles, M. B., Huberman, A. M., & Saldaña, J. (2014). Qualitative data analysis: A methods sourcebook (3rd ed.). Thousand Oaks, CA: Sage.

Opre, D. (2015). Teachers’ conceptions of assessment. Procedia - Social and Behavioral Sciences, 209, 229–233. https://doi.org/10.1016/j.sbspro.2015.11.222

Vandeyar, S., & Killen, R. (2007). Educators’ conceptions and practice of classroom assessment in post-apartheid South Africa. South African Journal of Education, 27(1), 101–115.

About the Authors

Natalie Nóbrega Santos is a PhD student at ISPA – Instituto Universitário. She graduated with a degree in Educational Psychology from the University of Madeira. Her research interests are academic assessment, the affective components of achievement, grade retention, and social and emotional learning. Her present research project is titled “Second-grade retention: Academic, cognitive, and socio-affective aspects involved in decision making.”

Vera Monteiro completed her undergraduate and master’s degrees in Educational Psychology at ISPA – Instituto Universitário and her PhD in the same field at the University of Lisbon. She has been a professor at ISPA – Instituto Universitário since 1991. Her research interests are assessment and feedback and their relation to learning. She investigates how assessment and feedback processes can optimize learning, whether through affective processes, such as motivation, engagement, and self-perceptions of competence, or through various cognitive processes. For this reason, she is also interested in students’ motivation to learn, particularly from the perspective of self-determination theory.

Lourdes Mata is an Assistant Professor at ISPA – Instituto Universitário. She graduated in Educational Psychology and holds a PhD in Children’s Studies from the Universidade do Minho. She studies the affective components of learning processes, aiming to identify and characterize individual beliefs and affective facets of learning among students throughout schooling, and to analyze the quality of education and care contexts, taking into account organizational aspects and teacher- and classroom-related variables.