Guest post by Matthew H. Loxton, a Principal Healthcare Analyst and Professional MAXQDA Trainer.

MAXQDA is well known as a leading qualitative data analysis (QDA) platform, and I have used it for typical research projects, such as analysis of organizations’ policies, healthcare improvement interviews, and focus groups. MAXQDA also lends itself to “off-label” uses, where it can increase efficiency and effectiveness. In this post, I discuss how I have used MAXQDA to rank resumes and applications for grants and awards, analyze and rate budget requests, analyze proposals and requests for proposals, and support strategic planning.

Using MAXQDA for Awarding Grants

As a director on the board of the Blue Faery Liver Cancer Association, I am called on to vote on research grants and on patient and caregiver awards. Typically, this involves comparing several applicants and ranking them against preset criteria, while staying alert to unexpected characteristics that may strengthen or disqualify an application.

When you boil this process down, it looks very much like a qualitative analysis problem with a little mixed methods work sprinkled over the top.

I have developed a preset code system for the grants and awards, with codes for whether the application is relevant to hepatocellular cancer (HCC), whether it falls within the past three years, and whether the applicant has stated or implied any of a number of specific elements of HCC research, treatment, or coping. I import each applicant's materials, whether audio, video, Word, or PDF files, into a separate MAXQDA Document Group, and then code them using the preset code system.

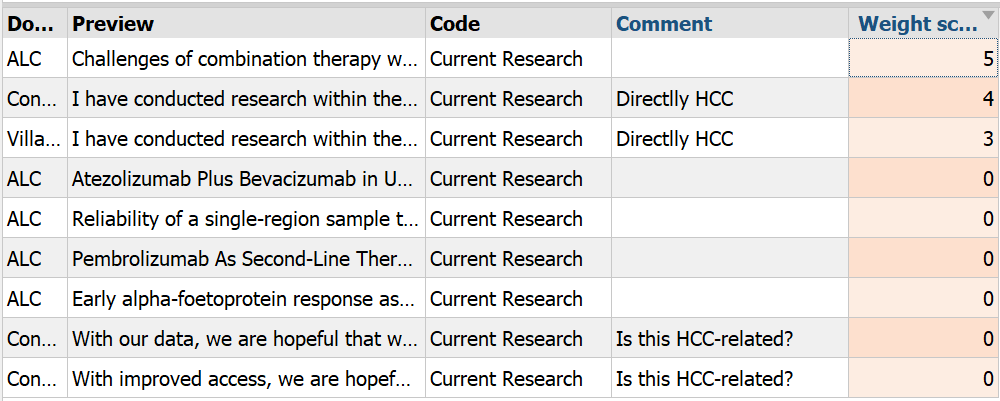

Once I have applied the codes, I use Modify Weight to assign a weight on a 1-5 scale to a single coded segment for each code and each applicant. This allows me to rank the applicants by their score on each code. I use the Edit Comment function to add comments to specific coded segments. Sometimes these are questions that I need to ask other directors on the panel, or reminders to myself about the pertinence or value of a specific fact. Figure 1 shows ranking on research-relevance codes, as well as the use of coded-segment comments for communication.

Figure 1: Ranked Coded Segments

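To make the ranking step concrete, here is a minimal sketch of the same logic outside MAXQDA, assuming the weighted coded segments have been exported or transcribed as simple (applicant, code, weight) rows. The applicant names, code names, and the `rank_by_code` and `total_scores` helpers are hypothetical illustrations, not part of MAXQDA, and summing weights across codes is just one possible overall view rather than the author's stated method.

```python
from collections import defaultdict

# Hypothetical rows, as they might be transcribed from an export of weighted
# coded segments: (applicant, code, weight on the 1-5 scale).
SEGMENTS = [
    ("Applicant A", "HCC relevance", 5),
    ("Applicant A", "Current research", 3),
    ("Applicant B", "HCC relevance", 4),
    ("Applicant B", "Current research", 5),
    ("Applicant C", "HCC relevance", 2),
]


def rank_by_code(rows, code):
    """Order applicants from highest to lowest weight for one code.

    Assumes one weighted segment per applicant per code, as described above.
    """
    scores = {}
    for applicant, row_code, weight in rows:
        if not 1 <= weight <= 5:
            raise ValueError(f"weight {weight} for {applicant} is outside 1-5")
        if row_code == code:
            scores[applicant] = weight
    return sorted(scores, key=scores.get, reverse=True)


def total_scores(rows):
    """Sum each applicant's weights across all codes (one possible overall view)."""
    totals = defaultdict(int)
    for applicant, _code, weight in rows:
        totals[applicant] += weight
    return dict(totals)


print(rank_by_code(SEGMENTS, "HCC relevance"))  # ['Applicant A', 'Applicant B', 'Applicant C']
print(total_scores(SEGMENTS))  # {'Applicant A': 8, 'Applicant B': 9, 'Applicant C': 2}
```

Keeping the per-code ranking separate from any total mirrors the post's approach of ranking applicants code by code, so a panel can see where each applicant is strong or weak.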

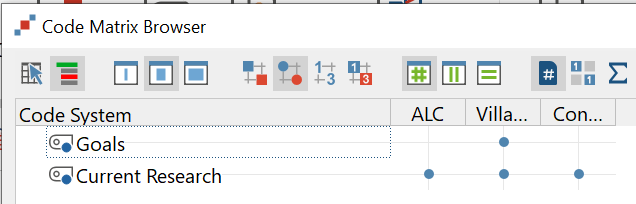

Next, I use the Code Matrix Browser to see whether any applicants are missing codes and are therefore ineligible. A missing code also prompts me to re-read that applicant's text to verify that it really does not meet the criterion. For example, in Figure 2 there are three applicants; at this point, all of them have shown evidence of current research, but only “Villa..” matched the goal-specific criteria. This Code Matrix Browser view prompted me to recheck the text to confirm.

Figure 2: Grant Application Code Matrix

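The Code Matrix Browser check can be approximated in a few lines, assuming the coded segments are available as (applicant, code) pairs. This is a sketch, not MAXQDA's implementation; the applicant names, the required-code list, and the `code_matrix` and `missing_required` helpers are hypothetical, and the example loosely mirrors Figure 2 (everyone has current research, only one applicant meets the goal-specific criterion).

```python
from collections import Counter

# Hypothetical (applicant, code) pairs, one per coded segment.
SEGMENTS = [
    ("Applicant A", "Current research"),
    ("Applicant B", "Current research"),
    ("Applicant C", "Current research"),
    ("Applicant C", "Goal-specific"),
    ("Applicant C", "Goal-specific"),
]
APPLICANTS = ["Applicant A", "Applicant B", "Applicant C"]
REQUIRED = ["Current research", "Goal-specific"]


def code_matrix(segments):
    """Count coded segments per (applicant, code) cell, like a code-by-document matrix."""
    return Counter(segments)


def missing_required(matrix, applicants, required_codes):
    """Map each applicant to the required codes that have no segments.

    These are candidates for ineligibility and should be re-read, not auto-rejected.
    """
    gaps = {}
    for applicant in applicants:
        # A Counter returns 0 for cells that were never coded.
        missing = [code for code in required_codes if matrix[(applicant, code)] == 0]
        if missing:
            gaps[applicant] = missing
    return gaps


matrix = code_matrix(SEGMENTS)
print(missing_required(matrix, APPLICANTS, REQUIRED))
# {'Applicant A': ['Goal-specific'], 'Applicant B': ['Goal-specific']}
```

The applicant list is passed in explicitly so that an applicant with no coded segments at all still shows up as missing every required code.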

At this point, I apply a grounded theory approach, looking for emergent codes that capture unexpected or overriding factors in selection. This allows for the possibility of an applicant meeting few or none of the preset criteria but having an unexpected quality that needs to be discussed with other panel members as a special case.

At the end, my output (Excel sheets and a summary) is traceable back to the original text. I can evaluate applicants quickly and give the rest of the panel a ranked list that can be combined with those of other members.

Using MAXQDA has reduced the time it takes me to evaluate grant and award applications. My output is also cleaner and more usable, and every decision can be traced back to the application text, which makes it more reliable and better justified.

Using MAXQDA for Proposals

Whether you are responding to a request for proposal (RFP) or evaluating responses to one, there are known facts or criteria that can be represented by thematic codes, while emergent criteria can be represented by grounded theory codes.

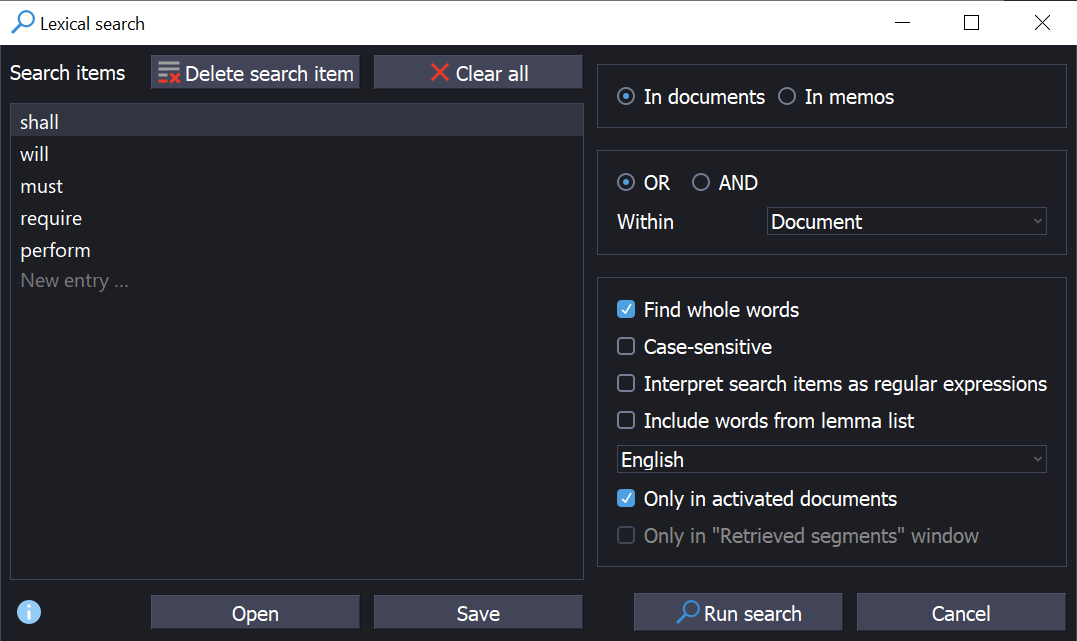

When I am writing a response to an RFP, I capture each requirement as a code through careful reading and qualitative analysis of the RFP text. Using lexical search and autocoding, I can quickly find all RFP sentences containing directive words such as “shall,” “must,” and “will,” because a sentence with those words typically states a requirement. Figure 3 shows the Lexical Search panel in use.

Figure 3: Lexical Search Criteria

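For readers who want to see what the search is doing, here is a rough plain-Python analogue of a lexical search for requirement words, assuming the RFP text is available as a string. It is not MAXQDA's search engine; the `requirement_sentences` helper and the sample RFP text are hypothetical. Matching whole words keeps “Willingness” from being picked up by “will,” but the results still need review, since a “will” sentence may describe what the buyer will do rather than a requirement.

```python
import re

# Words the post treats as signals of a requirement.
REQUIREMENT_WORDS = ("shall", "must", "will")


def requirement_sentences(text, words=REQUIREMENT_WORDS):
    """Return the sentences that contain any of `words` as whole words, ignoring case."""
    # Naive split after ., !, or ? followed by whitespace; abbreviations such as
    # "e.g." will cause false splits, so treat the result as a first pass.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(w) for w in words) + r")\b", re.IGNORECASE
    )
    return [s for s in sentences if pattern.search(s)]


rfp = (
    "The contractor shall provide monthly reports. "
    "Background is provided in Section 2. "
    "Offerors must include resumes for key personnel. "
    "Willingness to travel is preferred."
)
for sentence in requirement_sentences(rfp):
    print(sentence)
# The contractor shall provide monthly reports.
# Offerors must include resumes for key personnel.
```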

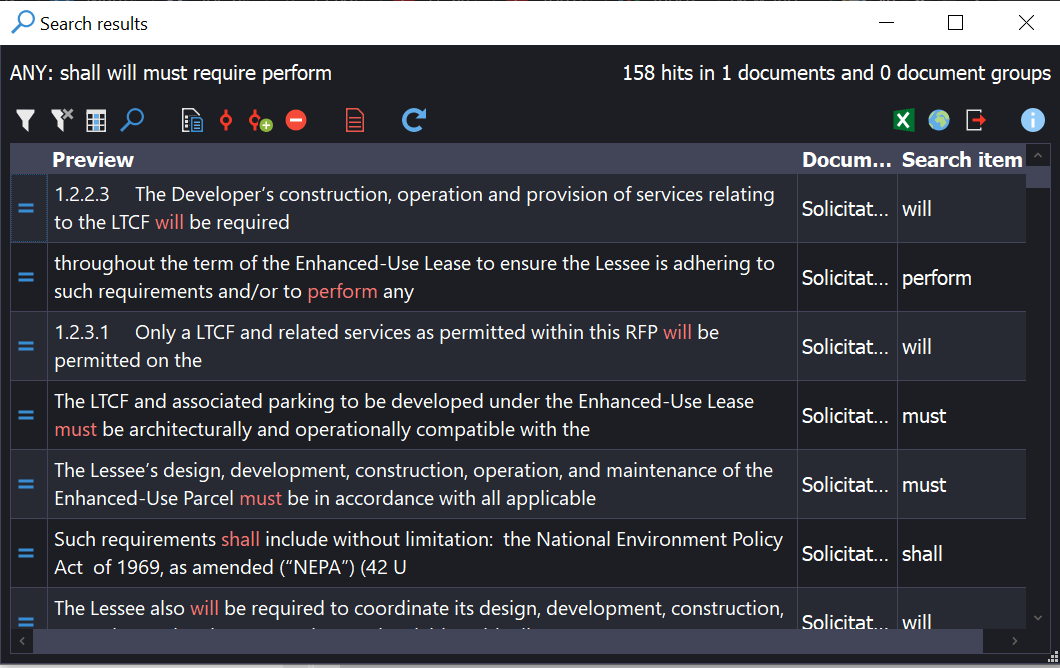

The results of the Lexical Search can be autocoded with requirements codes (Figure 4). I review my response by checking that each requirement code has been addressed in the text. This enables me to show a bid manager that I have addressed all the RFP requirements, and I can easily display a matrix that shows exactly which text segments relate to which requirements. The requirements code list can be exported as an Excel sheet along with code memos that explain my understanding of why each item is a requirement and what its implications are. The sheet is used in team discussions to reach agreement on a final list of proposal requirements.

Figure 4: Requirements Search Results

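The coverage check on a draft response can be sketched the same way, assuming you have the list of requirement codes and the (code, text) segments coded in the response. The requirement labels, sample text, and the `coverage` and `unaddressed` helpers are hypothetical; the point is only to show the requirement-to-segment matrix and the list of requirements with no coded text.

```python
# Hypothetical requirement codes (e.g. from autocoded RFP sentences) and
# (code, text) segments coded in a draft response.
REQUIREMENTS = ["REQ-01 Monthly reports", "REQ-02 Key personnel resumes", "REQ-03 Transition plan"]
RESPONSE_SEGMENTS = [
    ("REQ-01 Monthly reports", "We will deliver a status report on the fifth business day of each month."),
    ("REQ-02 Key personnel resumes", "Resumes for the project manager and lead analyst are in Appendix B."),
    ("REQ-01 Monthly reports", "Reports follow the template in Attachment 3."),
]


def coverage(requirements, segments):
    """Map each requirement code to the response text segments coded with it.

    Segments coded with anything other than a requirement code are ignored.
    """
    matrix = {code: [] for code in requirements}
    for code, text in segments:
        if code in matrix:
            matrix[code].append(text)
    return matrix


def unaddressed(requirements, segments):
    """List requirement codes with no coded response text, in requirement order."""
    return [code for code, texts in coverage(requirements, segments).items() if not texts]


print(unaddressed(REQUIREMENTS, RESPONSE_SEGMENTS))  # ['REQ-03 Transition plan']
```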

Conversely, when I am evaluating an RFP response, I start with codes for all the requirements I will evaluate, and then code text segments as I find matches that indicate compliance. Here too, a lexical search for relevant keywords greatly speeds up the process. Viewing the Document Portrait ordered by code and using the Code Matrix Browser enables me to quickly spot any bids that have failed to answer a “must have” criterion. I typically assign a weight on a 1-5 scale for each code, which allows me to easily rank the bids by each criterion.
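A minimal sketch of the bid evaluation step follows, assuming the weights have been gathered into a nested dictionary where a missing criterion means no compliant segment was coded. The bid names, criteria, and the `failing_must_haves` and `rank_by_criterion` helpers are hypothetical; the sketch first flags bids that miss a “must have” criterion and then ranks the remaining bids on a single criterion, as the paragraph above describes.

```python
# Hypothetical weights: {bid: {criterion: weight on a 1-5 scale}}. A criterion
# that is absent means no segment indicating compliance was coded for that bid.
WEIGHTS = {
    "Bid 1": {"Security plan": 4, "Past performance": 5},
    "Bid 2": {"Past performance": 3},
    "Bid 3": {"Security plan": 5, "Past performance": 2},
}
MUST_HAVE = ["Security plan"]


def failing_must_haves(weights, must_have):
    """Map each bid to the must-have criteria it has no coded compliance for."""
    failures = {}
    for bid, scores in weights.items():
        missing = [c for c in must_have if c not in scores]
        if missing:
            failures[bid] = missing
    return failures


def rank_by_criterion(weights, criterion, exclude=()):
    """Order bids by their weight on one criterion, skipping excluded bids."""
    eligible = [b for b, s in weights.items() if criterion in s and b not in exclude]
    return sorted(eligible, key=lambda b: weights[b][criterion], reverse=True)


failed = failing_must_haves(WEIGHTS, MUST_HAVE)
print(failed)  # {'Bid 2': ['Security plan']}
print(rank_by_criterion(WEIGHTS, "Past performance", exclude=failed))  # ['Bid 1', 'Bid 3']
```

Whether a failed must-have should exclude a bid outright or only flag it for re-reading is a policy choice; the `exclude` parameter leaves that decision to the evaluator.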

Using MAXQDA for Strategic Planning

For strategic planning, I use MAXQDA extensively for Foresight analysis. I use the Web Collector to harvest web pages that are good signals of change or stasis, and import them and any other sources into MAXQDA. I use a predetermined code system developed around the Houston Foresight Framework, and in each document I code the passage that best represents its “lede.” If an article is worthwhile but has no lede, I use the Paraphrase feature to write down what I consider to be its most important facts and concepts.

Using MAXQDA as the analytical workbench for Foresight has allowed our team of analysts to collaborate effectively, capture signals more efficiently, and develop scenarios and recommendations that have high construct validity and can be traced back to original sources with ease.

Conclusion

MAXQDA lends itself to the review and evaluation of a variety of texts in which specified or emergent criteria must be applied, but may have to be inferred through qualitative analysis. In my work, this has included evaluating grant proposals; resumes for job appointments, promotions, or transfers; and preparing and evaluating RFP bids, as well as supporting strategic planning. Although far from typical academic subject matter, all these tasks have elements in common that make them easier to analyze, rank, or evaluate using MAXQDA. It has saved me time and effort and has allowed me to produce my responses faster and with greater reliability, validity, and quality.

If you have used MAXQDA in a creative or non-academic way, please let us know!

About the Author

Matthew Loxton is a Principal Analyst at Whitney, Bradley, and Brown Inc. focused on healthcare improvement and Strategic Foresight. Matthew serves on the board of directors of the Blue Faery Liver Cancer Association, and holds a master’s degree in knowledge management (KM) from the University of Canberra. Matthew regularly blogs for Physician’s Weekly and is active on social media related to healthcare improvement.

Other articles by Matthew Loxton in the MAXQDA Research Blog:

Conducting and Analyzing Virtual Focus Groups

Ready to try MAXQDA for yourself? Download the free trial to test MAXQDA 2020 for 30 days with no obligation:
