The Intercoder Agreement function in MAXQDA works as follows:

- Two people code the identical document independently of each other, using the agreed-upon codes and code definitions. This can be done on the same computer or on different computers. The most important thing is that neither coder can see how the other has coded. It makes sense to name each copy of the document so that it is clear who coded which one (e.g., “Interview1_Michael” and “Interview1_Jessica”).

- To compare the two coded documents, both must be in the same MAXQDA project. This can be accomplished, for example, with the function Project > Merge projects, as long as both coders have been working with the same documents in their respective projects.

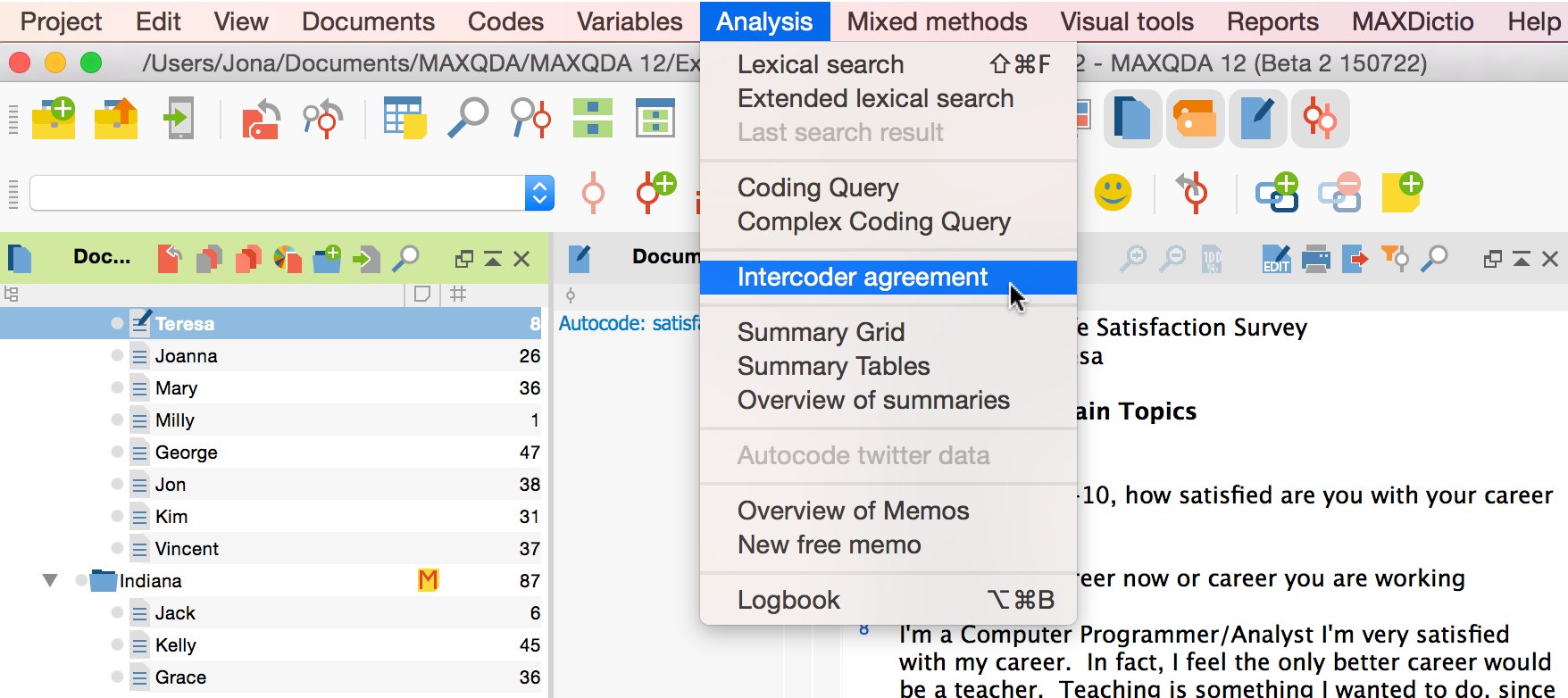

You can now start the Intercoder Agreement function by selecting it from the Analysis drop-down menu. It shows where the two coders’ code assignments match and where they do not.

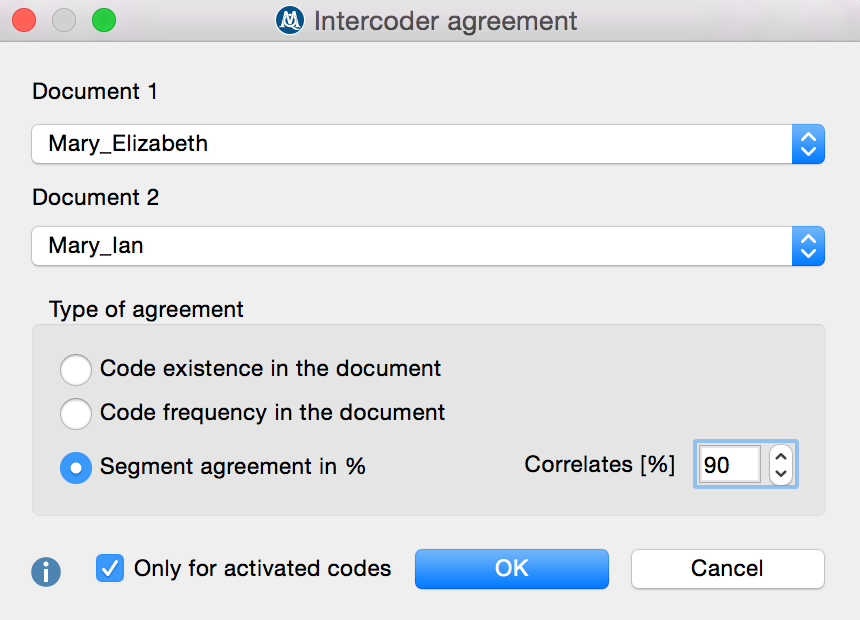

- Select the first coder’s document from the “Document 1” list and the second coder’s document from the “Document 2” list.

- If you only want to test the activated codes, check the box next to “Only for activated codes” at the bottom left of the window.

- You can then choose among three different analysis criteria for the agreement test:

Variation 1 – Code existence in the document

The criterion in this variation is the presence of each code in the document, meaning whether or not each code is found at least once in the document. This variation is most appropriate when the document is short and there are many codes in the “Code System.”

Variation 2 – Code frequency in the document

The criterion in this variation is the number of times a code appears in each coder’s version of the document.

Variation 3 – Segment agreement in percent

In this variation, the agreement of each individual coded segment is measured. This is the most precise criterion and is used most often in qualitative research. You can set the percentage of agreement a segment must reach to be considered in agreement (correlating). The tool then evaluates, segment by segment, the level of agreement between the two documents.

The screenshot above shows the Intercoder Agreement window set up to compare “Interview Mary _ Elizabeth” with “Interview Mary _ Ian.” The third variation is selected, meaning agreement will be tested at the segment level, and the percentage required for segments to be considered “in agreement” or “correlating” is 90%.

Results for Variation 1

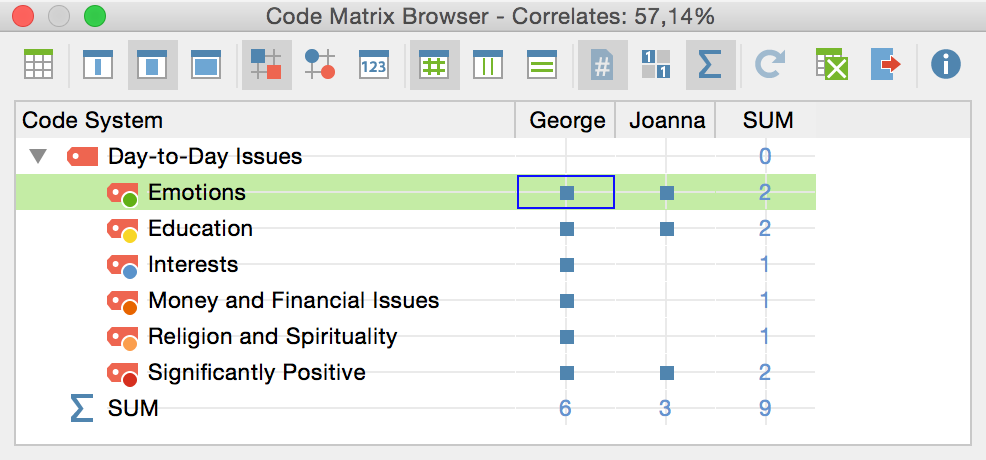

The Code Matrix Browser window appears, showing only the two documents being compared. It is set to visualize whether or not each code exists in each of the two documents: if a code exists, a blue circle or square is shown in that document’s column.

The screenshot shows the results. Codes such as “Day-to-Day Issues” and “Key Quotes” exist in both of the documents, but the code “Interview Guide Topics” only exists in the document coded by Ian.

Clicking one of the blue squares selects the corresponding document in the “Document Browser” and the corresponding code in the “Code System.” Double-clicking a square activates the document and the code and lists the corresponding segments in the “Retrieved segments” window.

At the top of the results window, you can see the percentage of agreement; in this case, it is 57.14%. When this percentage is calculated, a code that is absent from both documents also counts as a match.
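The existence criterion can be sketched in a few lines of Python. This is a minimal illustration, not MAXQDA’s implementation: it assumes each coder’s document is represented as a hypothetical set of the code names used at least once, and that the codes included in the test (all codes, or only the activated ones) are passed in explicitly. As described above, a code absent from both documents counts as a match. The code “Emotions” in the example is hypothetical.

```python
def existence_agreement(codes_doc1, codes_doc2, all_codes):
    """Percentage of codes whose presence or absence is the same in both documents.

    codes_doc1, codes_doc2: sets of code names used at least once (assumed input format).
    all_codes: the codes included in the test, one row each.
    """
    if not all_codes:
        raise ValueError("no codes to compare")
    # A code agrees if it is present in both documents or absent from both.
    agreements = sum((code in codes_doc1) == (code in codes_doc2) for code in all_codes)
    return 100.0 * agreements / len(all_codes)


# Hypothetical example: "Emotions" is used by neither coder, so it counts as a match.
codes = {"Day-to-Day Issues", "Key Quotes", "Interview Guide Topics", "Emotions"}
elizabeth = {"Day-to-Day Issues", "Key Quotes"}
ian = {"Day-to-Day Issues", "Key Quotes", "Interview Guide Topics"}
print(existence_agreement(elizabeth, ian, codes))  # 75.0
```

Only “Interview Guide Topics” disagrees here, so three of the four rows agree.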

Results for Variation 2

The results for the second variation are also shown in the Code Matrix Browser for the two documents being compared. Results can be viewed either as symbols or as numbers, which makes it fairly easy to see where the documents agree. The correlation percentage is again shown at the top of the results window. For each code, the number of times it appears in each document is compared: if the numbers are the same, there is agreement; if not, there is disagreement. It makes no difference whether the two numbers differ by 1 or by 100.

Percentage of Agreement

$$
\text{Percentage of agreement} = \frac{\#\,\text{agreements}}{\#\,\text{agreements} + \#\,\text{non-agreements}} = \frac{\#\,\text{agreements}}{\#\,\text{rows}}
$$
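The frequency criterion and the percentage formula can be sketched in Python. This is a minimal illustration under stated assumptions, not MAXQDA’s code: each coder’s document is represented as a hypothetical list of code names with one entry per coded segment, and each code passed in `codes` forms one row of the comparison. The code names “A” to “D” are placeholders.

```python
from collections import Counter


def frequency_agreement(segments_doc1, segments_doc2, codes):
    """Percentage of codes assigned equally often in both documents.

    segments_doc1, segments_doc2: lists of code names, one per coded segment (assumed format).
    codes: the codes included in the test, one row each.
    """
    if not codes:
        raise ValueError("no codes to compare")
    counts1, counts2 = Counter(segments_doc1), Counter(segments_doc2)
    # Only exact equality counts; a difference of 1 is as much a disagreement as 100.
    agreements = sum(counts1[code] == counts2[code] for code in codes)
    non_agreements = len(codes) - agreements
    return 100.0 * agreements / (agreements + non_agreements)


doc1 = ["A", "A", "B", "C", "D"]
doc2 = ["A", "A", "B", "C", "C", "D"]
print(frequency_agreement(doc1, doc2, ["A", "B", "C", "D"]))  # 75.0
```

Code “C” appears once in the first document and twice in the second, so it is the only disagreeing row. `Counter` returns 0 for codes that never occur, so a code missing from one document is compared as a count of zero.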

Results for Variation 3

This is the most complicated variation, since intercoder agreement is tested on the segment level. If the first document has 12 coded segments and the second document has 14, there will be 26 tests carried out and presented in 26 rows of a table.

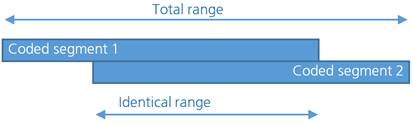

It is necessary to decide how similar two coded segments must be to be considered in agreement. The match criterion is the percentage of overlap between the coded segments. The percentage threshold can be set in the dialog window; the default value is 90%. The resulting percentage of agreement is the share of the overlapping area relative to the total area of the two coded segments, as illustrated schematically in the following diagram:

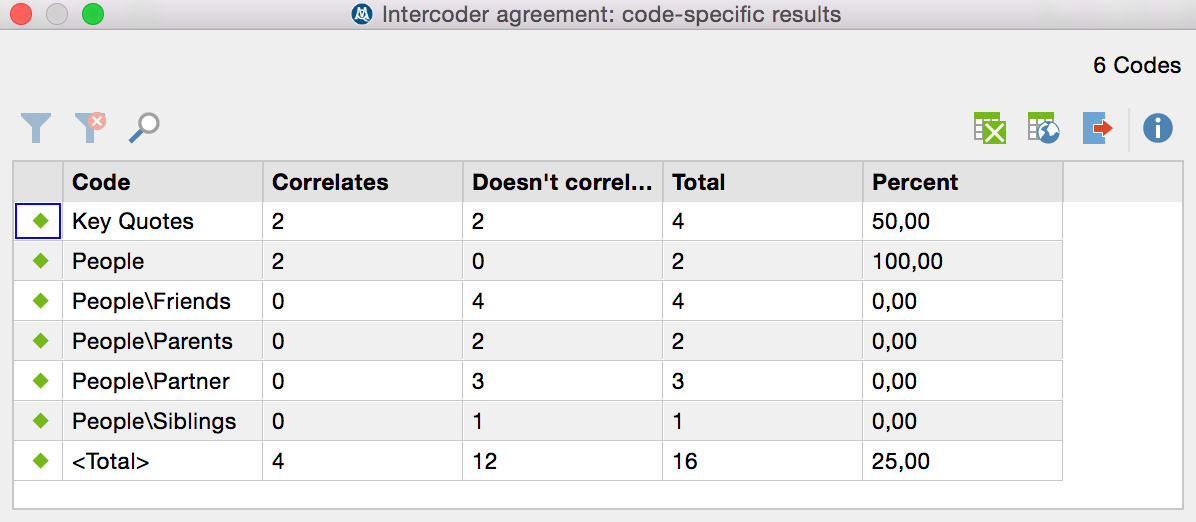
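The overlap criterion and the one-row-per-segment testing can be sketched as follows. Several assumptions here are not taken from MAXQDA: a coded segment is a hypothetical `(code, start, end)` tuple of character positions with `end` exclusive; the “total area” of two segments is taken to be the length covered by either of them; and a segment counts as in agreement if the other document contains a segment with the same code whose overlap meets the threshold.

```python
from typing import List, Tuple

Segment = Tuple[str, int, int]  # (code, start, end), end exclusive (assumed representation)


def overlap_percent(a: Segment, b: Segment) -> float:
    """Overlapping length as a percentage of the length covered by both segments."""
    _, a_start, a_end = a
    _, b_start, b_end = b
    overlap = max(0, min(a_end, b_end) - max(a_start, b_start))
    total = (a_end - a_start) + (b_end - b_start) - overlap
    return 100.0 * overlap / total if total > 0 else 0.0


def detailed_table(doc1: List[Segment], doc2: List[Segment], threshold: float = 90.0):
    """One row per coded segment in either document: (source, segment, agrees)."""
    rows = []
    for source, own, other in (("Document 1", doc1, doc2), ("Document 2", doc2, doc1)):
        for seg in own:
            agrees = any(
                o[0] == seg[0] and overlap_percent(seg, o) >= threshold for o in other
            )
            rows.append((source, seg, agrees))
    # Stable sort with False < True puts the non-agreeing rows first.
    rows.sort(key=lambda row: row[2])
    return rows


doc1 = [("Key Quotes", 0, 100), ("Day-to-Day Issues", 200, 300)]
doc2 = [("Key Quotes", 5, 100), ("Day-to-Day Issues", 250, 300),
        ("Interview Guide Topics", 400, 450)]
rows = detailed_table(doc1, doc2)
for row in rows:
    print(row)
```

The “Key Quotes” segments overlap by 95%, above the default threshold, while the “Day-to-Day Issues” segments overlap by only 50%. Two plus three segments yield five rows; by the same logic, 12 and 14 segments would yield the 26 rows mentioned above.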

Two results tables are created: the code-specific results table and the detailed agreement table.

The Code-Specific Results Table

This table contains one row for each code included in the test; codes that appear in neither document are ignored. It shows the level of agreement between the two coders for each code, which can reveal, for example, whether certain codes lead to disagreement more often than others. Each row shows how many times the code’s use in the first document agreed with its use in the second document, how many times it did not, and the resulting percentage of agreement.
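The aggregation behind such a table can be sketched in Python. This is an illustration, not MAXQDA’s code: it assumes the segment-level tests have already produced hypothetical `(code, agrees)` pairs, one per tested segment, and it adds a `<Total>` row over all codes. Codes never used by either coder produce no pairs, so they are ignored automatically.

```python
from collections import defaultdict


def code_specific_table(rows):
    """Summarize (code, agrees) pairs into per-code agreement counts and percentages.

    rows: iterable of (code, agrees) pairs, one per tested segment (assumed format).
    Returns {code: (agreements, non_agreements, percent)} plus a "<Total>" entry.
    """
    counts = defaultdict(lambda: [0, 0])  # code -> [agreements, non-agreements]
    for code, agrees in rows:
        counts[code][0 if agrees else 1] += 1
    table = {}
    total_agree = total_disagree = 0
    for code, (agree, disagree) in sorted(counts.items()):
        table[code] = (agree, disagree, 100.0 * agree / (agree + disagree))
        total_agree += agree
        total_disagree += disagree
    total = total_agree + total_disagree
    table["<Total>"] = (total_agree, total_disagree,
                        100.0 * total_agree / total if total else 0.0)
    return table


example_rows = [("Key Quotes", True), ("Key Quotes", True),
                ("Day-to-Day Issues", False), ("Day-to-Day Issues", True)]
table = code_specific_table(example_rows)
for code, values in table.items():
    print(code, values)
```

Here “Day-to-Day Issues” agrees in only half of its tests, which is the kind of pattern this table is meant to surface.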

The Detailed Agreement Table

The second results table shows exactly which coder coded which segment and whether the coded segments agree. A red icon in the first column indicates that the coded segments do not agree. Clicking the header of this column sorts the rows so that all non-agreeing segments appear first, allowing the coders to analyze and discuss them one by one.

Double-clicking the square in the “Document 1” column opens that document and displays the exact segment in question in the “Document Browser.” Double-clicking the square in the “Document 2” column likewise displays the segment in the Document Browser, showing where the agreeing (or non-agreeing) coded segment is. You can then easily switch back and forth between the documents to decide which of the coded segment variations is the most accurate.

Coefficient Kappa for exact segment matches

In qualitative analysis, analyzing intercoder agreement serves primarily to improve the coding instructions and individual codes. Nevertheless, it is often desirable to calculate the percentage of agreement, particularly with a view to the research report to be written later. This percentage can be viewed in the code-specific results table described above, which takes into account individual codes as well as all codes together.

Researchers often want to report not only simple percentages of agreement but also chance-corrected coefficients. The basic idea of such a coefficient is to correct the percentage of agreement for the agreement that would be expected if codes were assigned to segments at random.

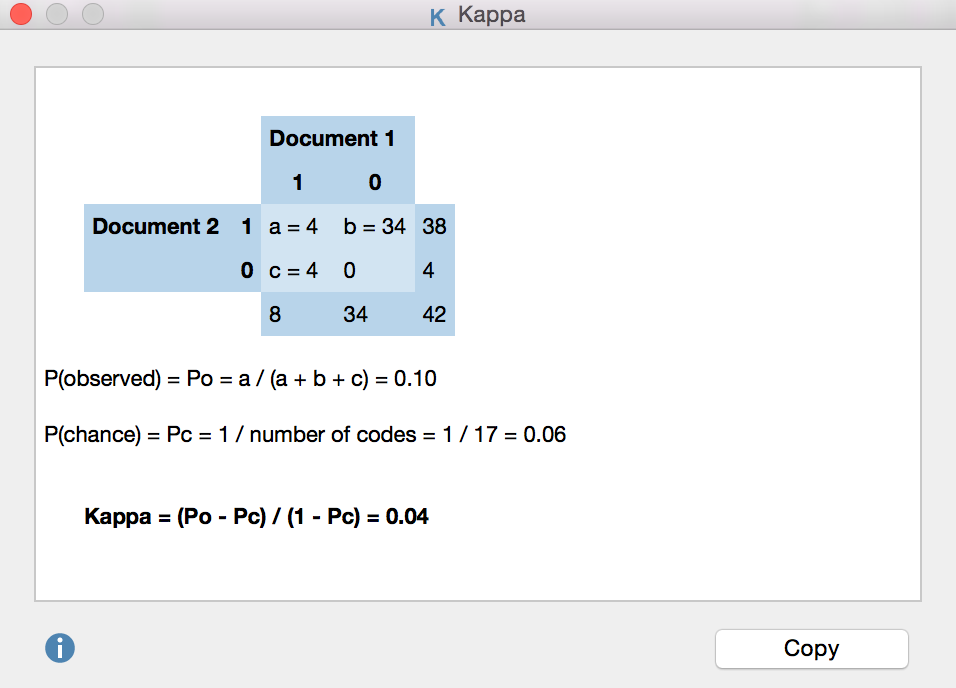

In MAXQDA, the commonly used coefficient “Kappa” can be used for this purpose: in the results table, click the Kappa symbol to begin the calculation for the current analysis. MAXQDA will display the following results table:


The number of matches is displayed in the upper left corner of the four-field table. The upper right and lower left corners contain the non-matches, meaning that a code was assigned in one document but not in the other. In MAXQDA, intercoder agreement at the segment level takes into account only segments to which at least one code has been assigned; therefore, the lower right cell is by definition zero (document sections are included in the analysis only if at least one of the two coders has coded them).

P(observed) corresponds to the simple percentage of agreement, as displayed in the <Total> row of the code-specific results table.

To calculate P(chance), the probability of chance agreement, MAXQDA uses a proposal by Brennan and Prediger (1981), who dealt extensively with the optimal use of Cohen's Kappa and its problems with unequal marginal distributions. In this approach, chance agreement is determined by the number of different categories used by both coders, which corresponds to the number of codes in the code-specific results table.
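The calculation can be sketched as follows. This assumes the Brennan and Prediger form as we understand it, P(chance) = 1/k for k categories and Kappa = (P(observed) − P(chance)) / (1 − P(chance)); the section above does not spell out MAXQDA’s exact formula, so treat this as an illustration. The inputs `a`, `b`, `c` stand for the upper left, upper right, and lower left cells of the four-field table (the lower right cell being zero), and the numbers in the example are hypothetical.

```python
def brennan_prediger_kappa(a: int, b: int, c: int, num_codes: int) -> float:
    """Chance-corrected agreement from a four-field table whose lower-right cell is 0.

    a: matches (upper left); b, c: non-matches (upper right, lower left).
    num_codes: number of codes in the code-specific results table.
    """
    if num_codes < 2:
        raise ValueError("need at least two codes for a chance correction")
    if a + b + c == 0:
        raise ValueError("the four-field table is empty")
    p_observed = a / (a + b + c)  # simple percentage of agreement, as a proportion
    p_chance = 1 / num_codes      # every category assumed equally likely by chance
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical table: 30 matches, 6 + 4 non-matches, 5 codes.
print(round(brennan_prediger_kappa(30, 6, 4, 5), 4))  # 0.6875
```

With many codes, P(chance) becomes small and Kappa approaches P(observed): the same table with 1,000 codes gives a Kappa just below 0.75.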

Calculating coefficients such as Kappa normally requires that segments be defined in advance and assigned predetermined codes. In qualitative research, however, a common approach is not to define segments a priori, but instead to ask both coders to identify all passages in the document that they consider relevant and to assign one or more appropriate codes to each. In this case, the probability that two coders code the same passage with the same code by chance would be lower, and Kappa would therefore be greater. One could also argue that in a multi-page text with many codes, the probability of chance coding matches is so small that Kappa effectively equals the simple percentage of agreement. In any case, whether and how to calculate Kappa should be carefully considered.